Are Dataframes better than Spark SQL ?

Half-knowledge is worse than ignorance. Thomas B. Macaulay

Since there is a lot of noise on the internet for the battle between dataframes vs spark.sql I was also at one point forced to believe that dataframes are always more performant than the query you write in spark.sql as dataframes go through the Catalyst Optimizer. But wait dataframes and SQL both go through optimizations then which one to choose when for the performance factor?

In reality, as long as both these approaches create the same query plan it doesn't matter whether you write. Let's verify this with the help of an example.

Let's say I want to update a given list of columns to an existing data frame.

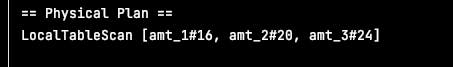

val amountsDF = Seq( (100,200,300), (-10,-20,-30), (50,-60,-70) ).toDF("amt_1", "amt_2", "amt_3") val columns = List("amt_1", "amt_2", "amt_3") val df = columns .foldLeft(amountsDF)( (tempDF, col) => tempDF.withColumn(col, when($"$col" < 0 , 0).otherwise($"$col")) ).explain()

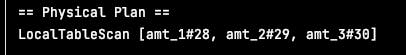

// same operation with sparl.sql amountsDF.createOrReplaceTempView("amounts_t") spark.sql( """ | select | if(amt_1 < 0 , 0, amt_1) amt_1, | if(amt_2 < 0 , 0, amt_2) amt_2, | if(amt_3 < 0 , 0, amt_3) amt_3 | from amounts_t |""".stripMargin).explain()

As both generating the same query plan, it's more of writing choice than a performance impact. Using dataframes or spark.sql becomes a personal choice. Whenever in doubt just check the query plan, offcourse the actual code that you are going to write won't be this straightforward but no harm in getting into good habits.